Much has been said about the longevity of OLAP. Here’s how ActiveViam proved it is here to stay

Over the past few years, I have come across several thought pieces proclaiming the death of OLAP, such as Holistics’ The Rise and Fall of the OLAP Cube.

But, as with other technologies marked for death, I doubt OLAP’s demise is at all imminent. OLAP has left an indelible mark on how data practitioners think about, structure, and report on their data. Indeed, OLAP rose largely because of the nature of the data generated and captured by OLTP (On-Line Transaction Processing) systems, and it was optimized for the way analysts wanted to examine that data.

In fact, many of the cries announcing the death of OLAP are less about OLAP itself and more about the OLAP cube and its data structure. Even the aforementioned Holistics walked back its proclamation with its OLAP != OLAP cube piece. The data storage landscape has changed, and with it, so have OLAP workloads. Combined with current advances in memory and storage, and with a solution in hand for handling continual data updates, OLAP’s future remains bright.

A History of OLAP

The term OLAP, short for On-Line Analytical Processing, was coined in 1993 in Edgar F. Codd’s white paper Providing OLAP to User-Analysts: An IT Mandate. Underlying OLAP is the concept of a multidimensional cube, or hypercube, with dimensions (categorical axes) and measures (numerical facts and computations). Though it coined the term, Codd’s paper also caused a bit of a scandal: his 12 rules for OLAP aligned perfectly with the premise of Arbor Software’s Essbase system, and it was revealed that Arbor Software had paid Codd to write the paper. Regardless, by then the evolution of the concept was well underway.

The foundations of On-Line Analytical Processing date back to the 1960s, when Kenneth Iverson defined APL (A Programming Language), which centered on multidimensional arrays and the functions and operations on them. From there, the 1970s saw the release of Express and VisiCalc, the first multidimensional tool and the first spreadsheet product, respectively. In the 1980s, Microsoft introduced its now ubiquitous spreadsheet product Excel, which would later add a pivot table feature, perhaps the most popular example of multidimensional data exploration.

Also in the 1970s, Donald D. Chamberlin and Raymond F. Boyce developed SQL at IBM for querying relational databases, basing it primarily on Codd’s earlier paper A Relational Model of Data for Large Shared Data Banks.

In the Modern Business World

The longevity of OLAP stems from its evolution as business needs changed. OLAP evolved into ROLAP (relational OLAP, where data is stored in and accessed from relational databases), MOLAP (multidimensional OLAP, where, instead of relational fact tables, the data is stored in an optimized multidimensional array), HOLAP (a hybrid of ROLAP and MOLAP), and other variants.
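To make the ROLAP/MOLAP distinction concrete, here is a minimal Python sketch, not drawn from any particular product, contrasting where aggregation happens. The fact rows and function names are hypothetical. The ROLAP side keeps facts in a relational table (using the standard-library `sqlite3` module) and aggregates with SQL at query time; the MOLAP side loads the same facts into a dense nested list indexed by member position.

```python
import sqlite3

# Hypothetical fact rows: (region, quarter, amount)
FACTS = [
    ("EU", "Q1", 100.0),
    ("EU", "Q2", 50.0),
    ("US", "Q1", 300.0),
    ("US", "Q2", 150.0),
]


def rolap_total_by_region():
    """ROLAP style: facts live in a relational table; SQL aggregates at query time."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE sales (region TEXT, quarter TEXT, amount REAL)")
    conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", FACTS)
    rows = conn.execute(
        "SELECT region, SUM(amount) FROM sales GROUP BY region ORDER BY region"
    ).fetchall()
    conn.close()
    return dict(rows)


def molap_total_by_region():
    """MOLAP style: facts are loaded into a dense array indexed by member positions."""
    regions = sorted({r for r, _, _ in FACTS})
    quarters = sorted({q for _, q, _ in FACTS})
    r_idx = {r: i for i, r in enumerate(regions)}
    q_idx = {q: j for j, q in enumerate(quarters)}
    # One cell per (region, quarter) pair, whether or not a fact exists for it.
    cube = [[0.0] * len(quarters) for _ in regions]
    for r, q, amount in FACTS:
        cube[r_idx[r]][q_idx[q]] += amount
    # A query becomes arithmetic over array positions rather than a scan of rows.
    return {r: sum(cube[r_idx[r]]) for r in regions}


print(rolap_total_by_region())
print(molap_total_by_region())
```

Both functions return the same totals; the difference is whether the multidimensional layout is built ahead of time (MOLAP) or derived from relational rows on each query (ROLAP). HOLAP mixes the two, for example keeping detail rows relational and summaries in arrays.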

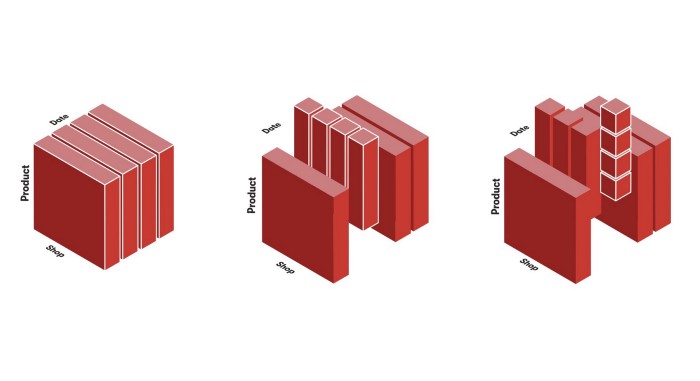

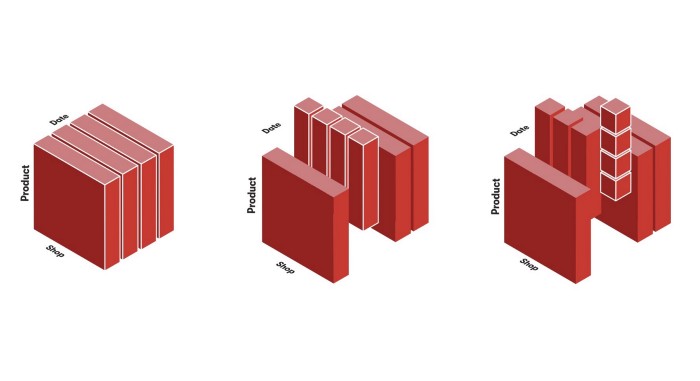

Each On-Line Analytical Processing framework endeavored to address complications around data storage and loading, giving business users the ability to derive insights from their data. Underlying historical or transactional data would be gathered from their respective stores and loaded into an OLAP cube in which some aggregations had already been precomputed. Once the cube was loaded, business users could quickly slice, dice, or drill down without waiting for any further processing.
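As an illustration of that workflow, here is a toy cube in plain Python, assuming a tiny hypothetical fact set and a simple sum measure. It precomputes every combination of members and an `ALL` roll-up marker at load time, so slicing (fixing one dimension), dicing (fixing several), and drilling down become dictionary lookups rather than scans. The names `build_cube`, `query`, and `drill_down` are illustrative only and do not come from any OLAP product.

```python
from collections import defaultdict
from itertools import product

# Hypothetical fact rows: (region, product, quarter, sales)
FACTS = [
    ("EU", "Bonds", "Q1", 100.0),
    ("EU", "Equities", "Q1", 250.0),
    ("US", "Bonds", "Q1", 300.0),
    ("US", "Bonds", "Q2", 150.0),
    ("EU", "Bonds", "Q2", 50.0),
]
DIMENSIONS = ("region", "product", "quarter")
ALL = "*"  # marker meaning "aggregated over this dimension"


def build_cube(facts):
    """Precompute the sum of sales for every mix of member-or-ALL per dimension."""
    cube = defaultdict(float)
    for *members, value in facts:
        # Each fact contributes to 2**len(DIMENSIONS) cells.
        for mask in product((False, True), repeat=len(members)):
            key = tuple(ALL if rolled else m for m, rolled in zip(members, mask))
            cube[key] += value
    return dict(cube)


def query(cube, **filters):
    """Look up one precomputed cell; unspecified dimensions are rolled up to ALL."""
    key = tuple(filters.get(d, ALL) for d in DIMENSIONS)
    return cube.get(key, 0.0)


def drill_down(cube, dimension, **filters):
    """Break a cell down by the individual members of one more dimension."""
    idx = DIMENSIONS.index(dimension)
    base = tuple(filters.get(d, ALL) for d in DIMENSIONS)
    return {
        key[idx]: value
        for key, value in cube.items()
        if key[idx] != ALL
        and all(key[i] == base[i] for i in range(len(DIMENSIONS)) if i != idx)
    }


cube = build_cube(FACTS)
print(query(cube))                                 # grand total
print(query(cube, region="EU"))                    # slice
print(query(cube, region="US", product="Bonds"))   # dice
print(drill_down(cube, "quarter", region="EU"))    # drill down
```

Every query is instant because the work was done at load time, but each fact touches 2**n cells for n dimensions, a cost that grows quickly as dimensions are added.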

Of course, the rise of big data threatened to complicate that. Essbase, one of the leading OLAP products of the 1990s, struggled with models that had too many dimensions and ultimately had to retool, having users classify standard dimensions as dense or sparse and define additional attribute dimensions so that data storage could be optimized. As we began collecting and generating vast amounts of data, we saw a shift from row-oriented to columnar data stores, in which one could easily add a new dimension without rebuilding tables. This ability to easily increase the number of dimensions, or potential dimensions, threatened OLAP’s practicality: each additional dimension could require re-aggregating all numerical facts.
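A back-of-the-envelope calculation shows why. Assuming each dimension contributes its members plus one “all” roll-up level, a fully precomputed cube has at most the product of (cardinality + 1) cells, and n flat dimensions yield 2**n distinct group-by views. The cardinalities below are hypothetical; real data is usually sparse, so actual cell counts are lower, but the multiplicative growth is the point.

```python
from math import prod


def max_cube_cells(cardinalities):
    """Upper bound on cells in a fully precomputed cube.

    Each dimension contributes its members plus one "ALL" roll-up level,
    so the bound is the product of (cardinality + 1) over all dimensions.
    """
    return prod(c + 1 for c in cardinalities)


def group_by_views(n_dimensions):
    """Number of distinct aggregation views (cuboids) for n flat dimensions."""
    return 2 ** n_dimensions


# Hypothetical cardinalities: 10 regions, 50 products, 4 quarters.
print(max_cube_cells([10, 50, 4]))
# Adding one hypothetical 100-member dimension multiplies the bound by 101.
print(max_cube_cells([10, 50, 4, 100]))
print(group_by_views(3), group_by_views(4))
```

With these numbers the bound goes from 2,805 cells to 283,305, and the number of views doubles from 8 to 16; every existing aggregate must also be recomputed to account for the new axis.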

Coupled with this exploding dimensionality was a growing desire for “real time” or “near real time” analytics. As processing power increased, waiting days or a week for transactional data to be queried and loaded into a cube became unacceptable, and it began to look plausible that one could query the underlying data directly and create reports or derive insights on the fly.

The Atoti difference

As for OLAP as a concept, many of the drawbacks cited above are criticisms of how the cube was historically applied rather than of the concept itself. At its heart, On-Line Analytical Processing is about optimizing the study of multidimensional data: precomputing the appropriate measures along the relevant dimensions to deliver business insights quickly, something a traditional relational database queried with SQL would struggle to do without a lot of work.

Since its inception in 2005, ActiveViam has defined, and achieved, an ambitious vision: solving the problem of fast business insights, beginning with its original ActivePivot product, a MOLAP solution. Over fifteen years, ActiveViam refined its premise: instead of building a new custom aggregation engine for each business case, build a business-agnostic one that puts data modeling in the user’s hands while still handling changing or updated data. Inspired by the financial sector, ActiveViam ensured the solution could handle non-linear computations such as Value at Risk.
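Value at Risk illustrates why non-linear measures are hard for a classic pre-aggregated cube. The sketch below assumes a simple historical VaR (the loss at the ceil(n * (1 - confidence))-th worst scenario, one of several conventions in use) and two hypothetical desks with P&L vectors over the same ten scenarios. Because VaR is not additive, the combined figure cannot be obtained by summing desk VaRs. One common approach, assumed here and not presented as ActiveViam’s implementation, is to aggregate the scenario vectors additively and compute VaR on the result at query time.

```python
import math

# Hypothetical P&L of two desks across the same ten historical scenarios.
DESK_A = [-10, 5, 3, -2, 8, 1, -4, 6, 2, 0]
DESK_B = [9, -7, -1, 4, -6, 2, 3, -5, 1, 0]


def historical_var(pnl, confidence=0.9):
    """Loss at the ceil(n * (1 - confidence))-th worst scenario (simple convention)."""
    # round() guards against floating-point noise such as 0.9999999999999998.
    k = max(1, math.ceil(round(len(pnl) * (1 - confidence), 9)))
    return -sorted(pnl)[k - 1]


def combine(*vectors):
    """Aggregate P&L vectors scenario by scenario (this step IS additive)."""
    return [sum(values) for values in zip(*vectors)]


sum_of_vars = historical_var(DESK_A) + historical_var(DESK_B)
var_of_sum = historical_var(combine(DESK_A, DESK_B))
print(sum_of_vars, var_of_sum)
```

Summing the desk figures gives 17, while the VaR of the combined book is 2, because the desks’ worst losses fall in different scenarios. A cube that only stored pre-summed VaR numbers would get this wrong.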

With memory and processing power becoming cheap (thanks, Gordon Moore!), multiprocessor computing became the obvious way forward, enabling sub-second queries. ActiveViam leveraged columnar storage to reduce memory usage and bitmap indices to manage up to several hundred dimensions, and built an intuitive UI to handle the large dimensionality. Adding the ability to load incremental data after modeling kept the cube data up to date. Taken together, these breakthroughs yielded a product capable of responsively analyzing complex business cases on up-to-date data, keeping pace with business users’ need for au courant metrics.
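The bitmap-index and incremental-load ideas can be sketched in a few lines of Python. This is a toy illustration, not ActiveViam’s engine: columns are plain lists, each dimension member gets a bitmap stored as a Python int (bit i is set when row i has that member), filters combine with bitwise AND, and `append` updates columns and bitmaps in place, so new data needs no rebuild. Production engines typically use compressed bitmaps and encoded columns; the `ColumnStore` class and its fields are hypothetical.

```python
class ColumnStore:
    """Toy columnar table with one bitmap (a Python int) per dimension member."""

    def __init__(self, dimensions, measure):
        self.dimensions = dimensions
        self.measure = measure
        # Columnar layout: one list per field instead of one record per row.
        self.columns = {name: [] for name in (*dimensions, measure)}
        # dimension -> member -> bitset of the rows holding that member
        self.bitmaps = {d: {} for d in dimensions}
        self.row_count = 0

    def append(self, row):
        """Incrementally add one row, updating columns and bitmaps in place."""
        i = self.row_count
        for name in self.columns:
            self.columns[name].append(row[name])
        for d in self.dimensions:
            member = row[d]
            self.bitmaps[d][member] = self.bitmaps[d].get(member, 0) | (1 << i)
        self.row_count += 1

    def total(self, **filters):
        """Sum the measure over rows matching every filter (bitmap AND)."""
        selected = (1 << self.row_count) - 1  # start with every row selected
        for d, member in filters.items():
            selected &= self.bitmaps[d].get(member, 0)
        values = self.columns[self.measure]
        return sum(values[i] for i in range(self.row_count) if (selected >> i) & 1)


# Hypothetical rows for illustration.
store = ColumnStore(("desk", "currency"), "notional")
for row in [
    {"desk": "Rates", "currency": "EUR", "notional": 100},
    {"desk": "FX", "currency": "USD", "notional": 40},
    {"desk": "Rates", "currency": "USD", "notional": 60},
]:
    store.append(row)
print(store.total(desk="Rates"), store.total(desk="Rates", currency="USD"))

# New data arrives: only the affected bitmaps and columns change.
store.append({"desk": "FX", "currency": "EUR", "notional": 25})
print(store.total(currency="EUR"), store.total())
```

Adding a dimension in this layout means adding one column and its bitmaps rather than re-aggregating every stored total, which is the property the paragraph above relies on.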

Having realized its vision, ActiveViam turned its attention to access: modern users wanted control, so the product needed to be available directly, in addition to hosted offerings. And although the engine is built on Java, Python was chosen as the configuration language so that a wide audience could adopt it easily.

Thus we arrive at Atoti. Free and available to the public, Atoti is a Python library that showcases the powerful solution built over the years, and it continues to grow as the landscape changes.

Conclusion

Is OLAP dead? Despite the hype, no. The first-generation tools had their issues and limitations, but multidimensional analysis is here to stay, and the desire for it to run as close to real time as possible is as pressing as ever. The relatively recent launches of ClickHouse (open-sourced in 2016) and Apache Kylin (2015) prove that the appetite for OLAP remains. Like many other technologies, On-Line Analytical Processing continues to evolve in response to the changing landscape, and ActiveViam is paving the way.

Sources

https://www.holistics.io/blog/the-rise-and-fall-of-the-olap-cube/

https://wisdomschema.com/molap-rolap/

https://olap.com/learn-bi-olap/olap-business-intelligence-history/

https://olap.com/types-of-olap-systems/

https://datacadamia.com/db/essbase/attribute_dimensions

https://datacadamia.com/db/essbase/standard_dimensions

https://docs.oracle.com/cd/E12825_01/epm.111/esb_dbag/frameset.htm?dattrib.htm

https://1library.net/document/zl5n6ngq-essbase-history-1-pdf.html

https://cs.ulb.ac.be/public/_media/teaching/infoh419/database_explosion_report.pdf

https://en.wikipedia.org/wiki/ClickHouse